Photo credit: Ana Laura Farias

I had the pleasure of taking part in the 2018 Machine Learning for Interaction Design summer school program hosted by the Copenhagen Institute of Interaction Design (CIID). The class was taught by Andreas Refsgaard and Gene Kogan at UN City in Copenhagen and lasted one week (July 9 – 13, 2018). During that period, they previewed numerous machine learning tools and programs that could be utilized by interaction designers.

Day 1

On the first day of the class, we were introduced to Wekinator and ml5.js, the former being a client-side application and the latter a JavaScript library. In addition to these two machine learning tools, we were also introduced to Processing, p5.js, and openFrameworks. With these tools under our belts, along with several lectures and a helping of Danish dining, we spent some time playing around with Wekinator and Processing, using them to prototype a Yoga Training App. The objective of this short sprint was to prove the utility of machine learning, even in its nascent form. Within 45 minutes, everyone had a working "app" that recognized user positions and confirmed whether they were correct yoga poses.

To accomplish this task, which might otherwise have taken several days or weeks to mock up, we simply had to train a model in Wekinator on examples of various poses. With the model trained, Wekinator could send an OSC signal over to Processing, which was used to provide minimal feedback to the user. The takeaway from the first day was clear: machine learning is an eminently viable tool for designers. Even in its infancy, it can be used to speed up prototyping while also opening doors to voice and image detection.
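For a rough feel of what "training on poses" can mean, here is a minimal sketch of a nearest-neighbour classifier, one of the classification algorithms Wekinator offers. It is not what we ran in class: the feature vectors, the pose names, and the `classify` and `feedback` helpers are all made up for illustration.

```python
import math

# Hypothetical training examples: (feature vector, pose label).
# The numbers are invented stand-ins for whatever inputs were recorded.
EXAMPLES = [
    ([0.9, 0.1, 0.5], "tree"),
    ([0.8, 0.2, 0.6], "tree"),
    ([0.2, 0.9, 0.4], "warrior"),
    ([0.1, 0.8, 0.3], "warrior"),
]


def classify(features, examples=EXAMPLES):
    """Return the label of the training example closest to `features`."""
    nearest = min(examples, key=lambda ex: math.dist(ex[0], features))
    return nearest[1]


def feedback(features, target_pose):
    """Minimal feedback, similar in spirit to what a sketch could display."""
    return "correct" if classify(features) == target_pose else "adjust your pose"


print(feedback([0.85, 0.15, 0.55], "tree"))
```

Recording more examples per pose is the whole "training" step here, which is part of why a prototype like this comes together so quickly.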

Training Wekinator on Yoga poses.

Day 2

The second day consisted of a more theoretical approach to machine learning. Gene took the class through high-level explanations of how computers can be taught. In doing so, he went through several of his projects to show various instances of machine learning in action. Before lunchtime, CIID alum and physical computing professor Bjørn Karmann came in and showed us how he used machine learning in Objectifier, a small AC adapter for making dumb products smart. The point of his experiment was to explore how machine learning could be manifested in the physical world.

After lunch, we were introduced to Runway, a powerful client-side application for machine learning designed by Cris Valenzuela. It accepts camera input and can talk to other programs over HTTP and OSC. Using Docker, we were able to download several pre-trained models including im2txt, OpenPose, and YOLO. Yet again, a world of interaction design which had once been relegated to advanced developers was now made accessible to anybody with a little bit of curiosity.

We finished out the day by returning to the concept which we had come across on the first day: "It is an [x] that you can control with [y]". For the rest of the day, we explored this concept, using a novel input to control a novel output. What I ended up with was a parametric chair controlled via my gob.

Facial tracking was done in openFrameworks and sent to Wekinator over OSC. Wekinator was trained on a continuous model. Data was then sent to Processing to control the sketch.
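For anyone curious what "sent to Wekinator over OSC" looks like on the wire, here is a minimal sketch in Python that hand-encodes an OSC message and sends it over UDP. It assumes Wekinator's default input settings (port 6448, address `/wek/inputs`); the two feature values and the `send_features` helper are hypothetical stand-ins for what the openFrameworks face tracker produced.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, floats):
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return osc_string(address) + osc_string(type_tags) + payload


def send_features(floats, host="127.0.0.1", port=6448):
    """Send one frame of features over UDP (assumed Wekinator default port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/wek/inputs", floats), (host, port))


if __name__ == "__main__":
    # Hypothetical features, e.g. mouth width and mouth height.
    send_features([0.42, 0.10])
```

In practice an OSC library would handle the encoding; writing it out by hand just shows how little is actually traveling between the programs.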

Days 3-5

With two days of training, we were ready to embark upon our first machine learning project. To start the project off right, Andreas had us break into small groups and do a round of 20/10/5 brainstorming (20 sketches + doodles, in 10 minutes, with 5 minutes to share). From there, we had to come up with three more ideas in five minutes. After selecting our two favorite ideas from the group, we came together as a class and shared. From there, we voted on the best ideas and broke into groups based on which idea we wanted to bring to life.

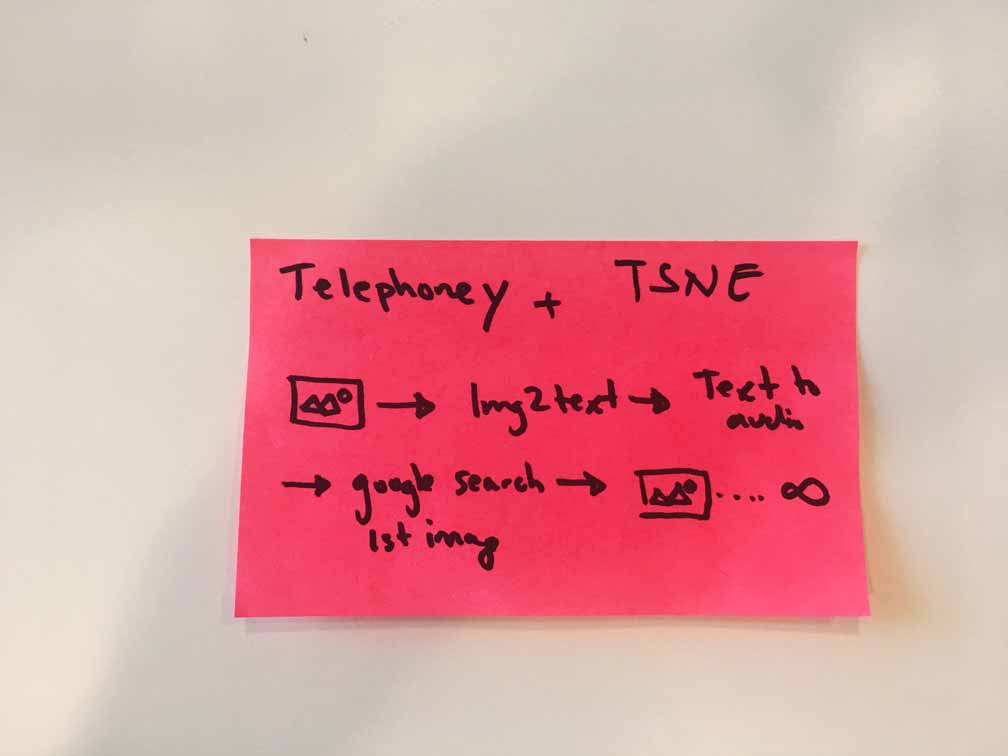

I ended up picking my own idea to prototype. I had come up with the idea after seeing the ridiculous captions that im2txt spit out. I was curious what would happen if you googled the caption and fed the first image result back into the model. This idea was somewhat similar to Jonathan Chomko's News Machine. However, instead of relying on countless technologies to interpret a given signal, this project would depend entirely on machine learning.
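The loop itself is simple enough to sketch in a few lines of Python. This is not the project's actual code: `caption_loop` is a hypothetical name, and the captioning and search steps are passed in as plain functions, with stubs standing in for im2txt and the image search so the sketch runs without any network access.

```python
from typing import Callable, List, Tuple


def caption_loop(
    start_image: str,
    caption_image: Callable[[str], str],
    first_image_for: Callable[[str], str],
    rounds: int = 3,
) -> List[Tuple[str, str]]:
    """Alternate captioning and searching, recording each (image, caption) pair."""
    history = []
    image = start_image
    for _ in range(rounds):
        caption = caption_image(image)    # stand-in for the captioning model
        history.append((image, caption))
        image = first_image_for(caption)  # stand-in for an image search
    return history


# Stub implementations, purely illustrative.
def fake_caption(image: str) -> str:
    return f"a photo of {image}"


def fake_search(query: str) -> str:
    return f"result[{query}]"


for image, caption in caption_loop("cat.jpg", fake_caption, fake_search, rounds=2):
    print(image, "->", caption)
```

Capping the number of rounds matters: left alone, the loop never ends, since every caption produces a new image and every image a new caption.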

Lars and Mikkel brainstorming.

Goofy Google in its embryonic form.

The following 48 hours were spent hacking through Runway data, web sockets, Google API documentation, and cross-origin errors. Thankfully, I was able to get a viable demo working for Friday. At 1:30 PM, I presented what I dubbed Goofy Google to the entirety of the second-week CIID Summer School cohort. To end the week of school, we all went on a canal tour of Copenhagen. It was there that I got the chance to talk with Bjørn, who suggested I make Goofy Google into a poster series or a short video montage.

The project as a whole is complete, but it needs some cleanup before it is ready to live on the web. For more information about the project itself, you can visit the breakdown page or check out the source code on GitHub. If you have any questions about my process or the class, or need help setting up something similar, feel free to reach out to me.

An infinite loop of machine learning.

Special thanks go out to Gene and Andreas for sharing their knowledge, to CIID for organizing the Summer School experience, and to Cris Valenzuela for having such beautifully documented code.